Review: Lattice Theory of Information

Published:

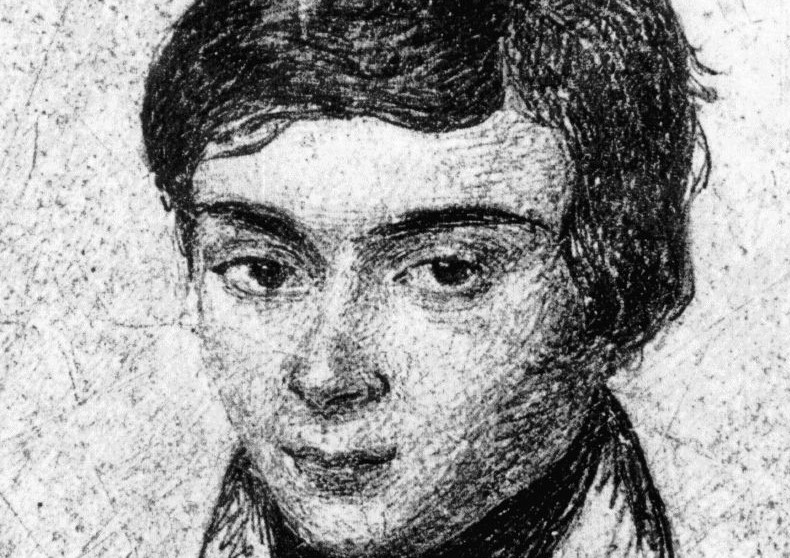

Claude Shannon, known as the father of information theory, is little known for his contribution to none other than… lattice theory. In this paper, Shannon introduces a metric lattice of discrete stochastic processes, but his information lattice makes sense in any setting where conditional entropy is well-defined. The paper is very short, only a half-dozen pages, but it contains a great deal of insight into the ultimate question in his field: What is information, actually?
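To make the "metric lattice" idea concrete, here is a minimal Python sketch for a pair of finite random variables rather than full stochastic processes. It assumes my reading of the paper: one variable sits above another in the lattice when the conditional entropy of the lower one given the upper one is zero, and the distance between two variables is the sum of the two conditional entropies, H(X|Y) + H(Y|X). The function names and the dictionary representation of a joint distribution are my own illustrative choices, not Shannon's notation.

```python
from collections import defaultdict
from math import log2


def entropy(dist):
    """Shannon entropy, in bits, of a dict mapping outcomes to probabilities."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)


def marginal(joint, axis):
    """Marginal distribution of one coordinate (0 or 1) of a joint distribution.

    `joint` maps (x, y) pairs to probabilities.
    """
    out = defaultdict(float)
    for pair, p in joint.items():
        out[pair[axis]] += p
    return dict(out)


def conditional_entropy(joint, given):
    """Entropy of the other coordinate given coordinate `given`.

    Uses the chain rule: H(other | given) = H(X, Y) - H(given).
    """
    return entropy(joint) - entropy(marginal(joint, given))


def information_distance(joint):
    """Assumed form of the metric: rho(X, Y) = H(X|Y) + H(Y|X)."""
    return conditional_entropy(joint, 0) + conditional_entropy(joint, 1)


# Example: X is uniform on {0, 1, 2, 3} and Y is the parity of X.
# Y is a function of X, so H(Y|X) = 0 and X lies above Y in the ordering.
joint = {(x, x % 2): 0.25 for x in range(4)}

print(conditional_entropy(joint, 0))  # H(Y|X) -> 0.0
print(conditional_entropy(joint, 1))  # H(X|Y) -> 1.0
print(information_distance(joint))    # -> 1.0
```

In this toy example the distance is one bit: knowing the parity still leaves one bit of uncertainty about X, while knowing X leaves none about the parity. Two variables that determine each other would be at distance zero, which is why they count as the same element of information.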

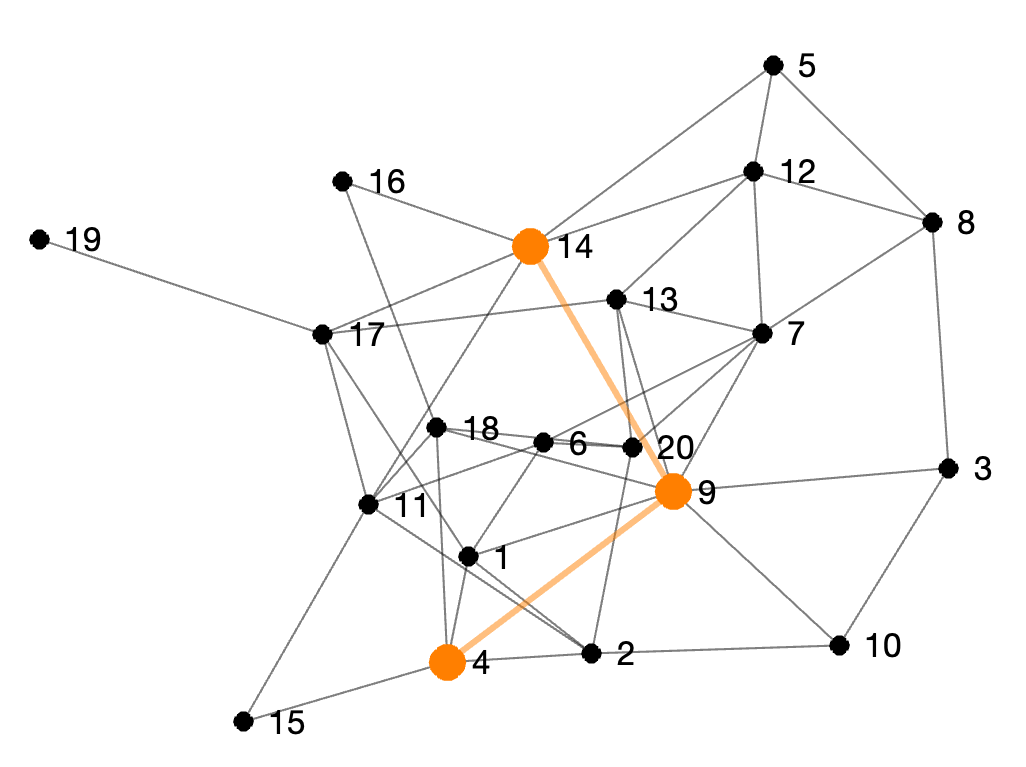

In the abstract of his paper, Shannon suggests that these sorts of lattices may be useful in tackling what is now widely known as the network coding problem, which he describes as a “communication system consisting of a large number of transmitting and receiving points with some type of interconnecting network between the various points.” To my knowledge, network coding via lattice theory is still an open problem.

This paper, written before the advent of computer typesetting, was produced in the old style: typewriter and handwritten mathematical symbols. As a convenience to others who want to enjoy this unusual piece of mathematical history, I have retyped his original paper. I did not take any editorial liberties, maintaining his original notation throughout. However, I did annotate the paper with plenty of notes to help the reader along the way.

Enjoy the paper: The Lattice Theory of Information

Reference

Shannon, C. (1953). The lattice theory of information. Transactions of the IRE Professional Group on Information Theory, 1(1), 105-107.